A Brief Colonial History Of Ceylon(SriLanka)

Sri Lanka: One Island Two Nations

==========================

Thiranjala Weerasinghe sj.- One Island Two Nations

Sunday, July 8, 2018

Politics of Attitude: What is the truth?

We practice several intellectually devious approaches to justify our views and prove ourselves right in our pursuit of truth.

All control, in essence, is about who controls the truth.

― Joseph Rain, The Unfinished Book About Who We Are

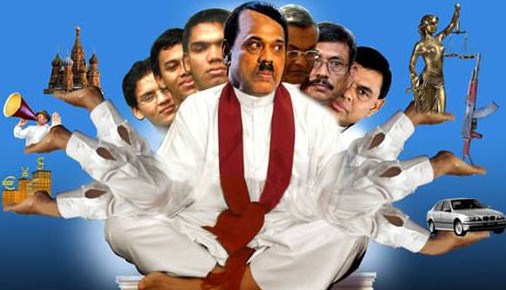

(July 6, 2018, Montreal, Sri Lanka Guardian) Recently, there was a furore in the Sri Lankan Parliament about a contentious article published in The New York Times of 25 June 2018 titled “How China Got Sri Lanka to Cough Up a Port”. It claimed that: “Every time Sri Lanka’s president, Mahinda Rajapaksa, turned to his Chinese allies for loans and assistance with an ambitious port project, the answer was yes”; and followed after a few sentences with the conclusion that: “And then the port became China’s”. The Sri Lankan news portal Ada Derana reported that the Chinese Embassy in Colombo issued a response to the article saying that: “The Embassy has noticed the New York Times’ article published on June 25, as well as the clarifications and responses by various parties from Sri Lanka, criticizing it full of political prejudice and completely inconsistent with the fact”. Ada Derana quoted the Embassy as also saying: “China has always been pursuing a friendly policy toward Sri Lanka, firmly supporting the latter’s independence, sovereignty, and territorial integrity, and opposing any country’s interference in the internal affairs of Sri Lanka”.

This article is not about the credibility or authenticity of the article in question. Instead, it will discuss some psychological dimensions that have steered politics from being ideological to attitudinal. To accomplish this, one has to determine what the truth is and, in turn, delve into some psychosociological factors.

Acclaimed cognitive neuroscience academic Tali Sharot, in her book The Influential Mind: What the Brain Reveals About Our Power to Change Others, says: “Seeking out and interpreting data in a way that strengthens our preestablished opinions is known as the confirmation bias”, a term the author uses to explain that some people reject evidence that does not fit their preconceived notions and opinions. An example given is how people with strong analytical skills can spin data to justify an argument, and then use that data to convince those whose mindset makes them readily receptive to it. There are many election campaigns around the world where this phenomenon worked to favour one candidate over another in a tendentious and often disingenuous manner. Inherent to this phenomenon is the nature of the human brain, which impels us not so much to uncover the truth as to prove to others that we are right. Being burdened with a legal education, the author of this article is drawn towards wondering whether this is the reason there are two systems of administering justice: the inquisitorial system, where the primary objective is to determine the truth; and the adversarial system, where the key driver is to have two parties arguing with each other as to who is right.

The recently highlighted phenomenon of fake news comes into focus here. Politicians say whatever comes to their mouths and people believe them without question. When the politicians are found to have uttered falsehoods, the believers stick to their belief because they do not want to change their minds. It would be too inconvenient and cumbersome. Charles J. Sykes, in his book How the Right Lost Its Mind, says: “This raises the question, why are so many people willing to believe fake news? The answer is deceptively simple – they believed fake news because they wanted to and because it was easy”. Sykes goes on to say: “many voters use information not to discover what is true, but rather to reinforce their relationship to their group or their tribe. They use reason to confirm or justify the outcome they want”.

Long before social media and the tech marvels that have encroached on our collective thinking, in earlier generations too, our brains were wired to think in terms of the confirmation bias and the compelling urge to influence others with our thinking. The human brain has been wired to think on a model that has evolved over centuries. This is not merely a spontaneous reaction and awakening to innovative technology or social networking. Our political thinking and social mores have been evolving agelessly. Our brains have been gaining gradual manipulative power over millions of years. What we want to believe is what we will strive to prove, no matter what. One wonders how this progression pairs off with what the great Einstein said: “There is nothing called right or wrong: only what works and what doesn’t work”. But one qualification: Einstein did not add the words “for us” after his statement.

Daniel Kahneman, Nobel Laureate, talks of a phenomenon called “cognitive ease”, a process in which humans instinctively avoid and resist facts that are calculated to make them think harder. This makes them accept information that supports their thinking and confirms their argument. In other words, the “confirmation bias” in practice.

We practice several intellectually devious approaches to justify our views and prove ourselves right in our pursuit of truth. Perhaps the answer lies with Daniel Kahneman, who in his book Thinking, Fast and Slow calls this mental process intuitive heuristics, whereby most people, who are naturally rational thinkers, depart from reality when influenced by fear, bias or their upbringing and beliefs. Kahneman says that the essence of intuitive heuristics can be clearly seen in instances when a person, faced with a difficult question, often answers an easier one, usually without noticing the substitution. In other words, intense concentration on a subjective position makes one socially and rationally blind.

As Einstein said: “Whoever is careless in the truth in small matters cannot be trusted with important matters”.